Exploring Cache-Augmented Generation (CAG): A New Frontier in Language Model Enhancement

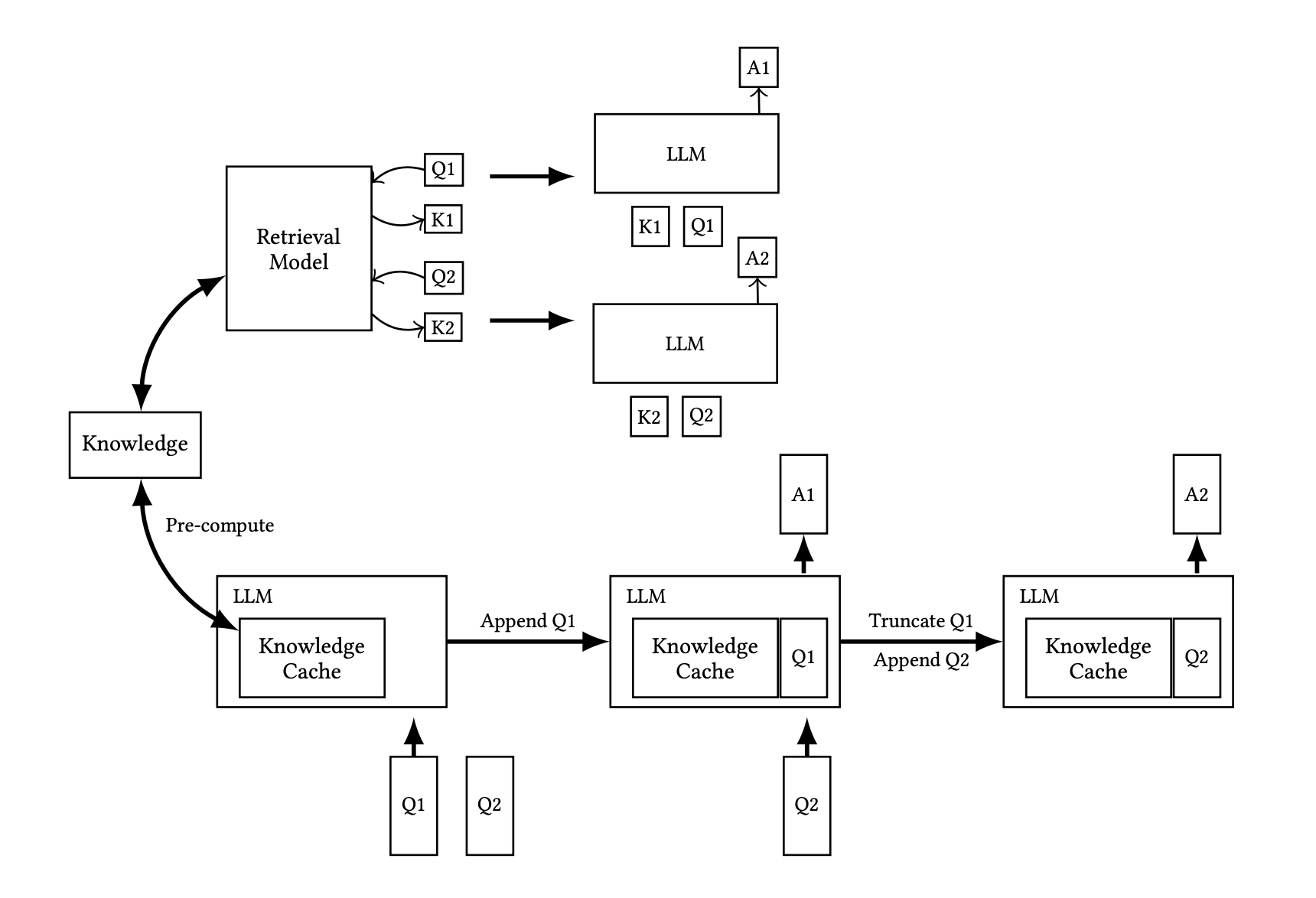

Retrieval-Augmented Generation (RAG) is a widely used way to enhance language models by integrating external knowledge sources. However, RAG has its challenges: retrieval latency, retrieval errors, and increased system complexity. Cache-Augmented Generation (CAG) is an alternative that aims to avoid these problems by eliminating real-time retrieval.

What is Cache-Augmented Generation (CAG)?

CAG leverages the extended context windows of modern large language models (LLMs) by preloading all relevant resources into the model’s context and caching its runtime parameters, that is, the key-value (KV) cache computed over those resources. At inference time the model generates responses directly from this cached context, with no real-time retrieval step.

Source: https://arxiv.org/html/2412.15605v1
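To make the workflow concrete, here is a minimal sketch in plain Python. It is a toy simulation, not the paper's implementation: `ToyModel`, `CagSession`, and the whitespace "tokenizer" are hypothetical stand-ins, and the "KV cache" is just a list with one entry per token. It shows the three steps of preloading the documents once, answering a query against the cached context, and truncating the cache back to the preloaded prefix before the next query.

```python
from dataclasses import dataclass, field


@dataclass
class ToyModel:
    """Hypothetical stand-in for an LLM that counts the tokens it encodes."""
    tokens_encoded: int = 0

    def encode(self, tokens):
        # A real model would compute key/value tensors for every token;
        # here each cache entry is simply the token itself.
        self.tokens_encoded += len(tokens)
        return list(tokens)


@dataclass
class CagSession:
    model: ToyModel
    kv_cache: list = field(default_factory=list)
    preloaded_len: int = 0

    def preload(self, documents):
        """Encode the whole knowledge source once and keep the cache."""
        for doc in documents:
            self.kv_cache.extend(self.model.encode(doc.split()))
        self.preloaded_len = len(self.kv_cache)

    def answer(self, query):
        """Encode only the query; the documents are already cached."""
        self.kv_cache.extend(self.model.encode(query.split()))
        context_size = len(self.kv_cache)  # what generation would attend to
        # Reset: drop the query tokens so the next query starts clean.
        del self.kv_cache[self.preloaded_len:]
        return context_size


docs = [
    "CAG preloads documents into the context",
    "RAG retrieves documents per query",
]
queries = ["What does CAG preload?", "How does RAG differ?"]

session = CagSession(ToyModel())
session.preload(docs)
sizes = [session.answer(q) for q in queries]

print(sizes)                         # [15, 15]
print(session.model.tokens_encoded)  # 19: 11 document tokens once, plus 4 per query
```

Without the cache, each query would re-encode the 11 document tokens along with its own 4, for 30 tokens in total instead of 19. The gap widens as the knowledge source grows or as more queries are served.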


The Advantages of CAG

- Reduced Latency: With no retrieval step at inference time, the model does not have to search for documents or re-encode them for each query, which shortens response time.

- Improved Reliability: Because the full knowledge source is already in the context, there is no retriever that can select irrelevant or incomplete passages, so retrieval errors are minimized.

- Simplified Design: CAG removes the retrieval component from the pipeline, and the paper reports results comparable or superior to RAG with less system complexity.

Understanding the Limitations of CAG

CAG also has limitations:

- Limited Knowledge Size: CAG requires the entire knowledge source to fit within the context window, which rules it out for tasks involving extremely large datasets.

- Context Length Constraints: The performance of LLMs may degrade with very long contexts, so a knowledge source that technically fits may still produce weaker answers.
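A simple pre-check can tell you whether a knowledge source is small enough for CAG at all. The sketch below is illustrative only: `estimate_tokens` and `fits_in_context` are hypothetical helper names, and counting whitespace-separated words is a crude proxy that typically undercounts what a real tokenizer produces. In practice you would use the tokenizer of the model you plan to deploy.

```python
def estimate_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word. Subword
    # tokenizers typically yield more, so treat this as a lower bound.
    return len(text.split())


def fits_in_context(documents, context_window: int, reserved_tokens: int) -> bool:
    """Return True if all documents fit while leaving room for query and answer."""
    needed = sum(estimate_tokens(doc) for doc in documents)
    return needed + reserved_tokens <= context_window


knowledge_base = ["alpha beta gamma", "delta epsilon"]  # 5 words in total

print(fits_in_context(knowledge_base, context_window=8, reserved_tokens=2))  # True: 5 + 2 <= 8
print(fits_in_context(knowledge_base, context_window=6, reserved_tokens=2))  # False: 5 + 2 > 6
```

If the check fails, the knowledge source is too large for a pure CAG setup with that model, and a retrieval-based approach remains the safer choice.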

The paper also examines how model performance changes with context length, which helps identify the scenarios where CAG works best.

The Future of CAG

Both limitations are becoming less restrictive as LLMs gain longer context windows and get better at extracting relevant information from long inputs. As these models improve, CAG should be able to handle larger knowledge sources and more complex applications, making it a practical alternative to traditional RAG in more settings.

In conclusion, Cache-Augmented Generation addresses the main challenges of RAG (retrieval latency, retrieval errors, and system complexity) by relying on the long context windows of modern LLMs. As those windows grow, CAG is likely to play a larger role in building efficient and reliable language model applications.

References:

- https://arxiv.org/html/2412.15605v1

- https://github.com/hhhuang/CAG/tree/main

- https://www.youtube.com/watch?v=NaEf_uiFX6o